Section 3 Problem Set 3

- Due: Tuesday February 9 by noon CST.

- Upload your solutions to Moodle in a PDF.

- Please feel free to use RStudio for all row reductions.

- You can download the Rmd source file for this problem set.

The Problem Set covers sections 1.8, 1.9, 2.1, 2.2.

3.1 Properties of Linear Transformations

Here are the row reductions of four matrices into reduced row echelon form. \[ \begin{array}{ll} A \longrightarrow \begin{bmatrix} 1 & 0 & 5 & -3 & 0\\ 0 & 1 & -2 & 8 & 0 \\ 0 & 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \end{bmatrix} \qquad & B \longrightarrow \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 \end{bmatrix} \\ \\ C \longrightarrow \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix} & D \longrightarrow \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 1 \end{bmatrix} \end{array} \]

In each case, if \(T_M\) is the linear transformation given by the matrix product \(T_M(x) = M x\), where \(M\) is the given matrix, then \(T_M: \mathbb{R}^n \to \mathbb{R}^m\) is a transformation from domain \(\mathbb{R}^n\) to codomain (aka target) \(\mathbb{R}^m\).

Determine the appropriate values for \(n\) and \(m\), and decide whether \(T_M\) is one-to-one and/or onto. Submit your answers in table form, as shown below. \[ \begin{array} {|c|c|c|c|c|} \hline \text{transformation} & n & m & \text{one-to-one?} & \text{onto?} \\ \hline T_A &\phantom{\Big\vert XX}&\phantom{\Big\vert XX}&& \\ \hline T_B &\phantom{\Big\vert XX}&&& \\ \hline T_C &\phantom{\Big\vert XX}&&& \\ \hline T_D &\phantom{\Big\vert XX}&&& \\ \hline \end{array} \hskip5in \]
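As a rough illustration of the bookkeeping involved, the sketch below counts pivots for a small made-up matrix and compares that count with the number of columns and rows. The matrix `M` here is hypothetical and is not one of A, B, C, or D; using `qr(M)$rank` as the pivot count is an assumption of convenience, since the rank of a matrix equals its number of pivot positions.

```r
# Hypothetical 3 x 2 matrix (NOT one of A, B, C, D from the problem).
M <- rbind(c(1, 2),
           c(0, 1),
           c(1, 0))

n <- ncol(M)            # dimension of the domain R^n
m <- nrow(M)            # dimension of the codomain R^m
pivots <- qr(M)$rank    # rank = number of pivot positions

one_to_one <- (pivots == n)   # TRUE when every column has a pivot
onto       <- (pivots == m)   # TRUE when every row has a pivot

c(n = n, m = m, pivots = pivots)
one_to_one
onto
```

The same comparison can be read directly off a reduced row echelon form by counting leading 1s by hand.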

3.2 Partial Information about a Linear Transformation

We are given that \(T: \mathbb{R}^4 \rightarrow \mathbb{R}^3\) is a linear transformation such that: \[ T\left(\begin{bmatrix} 3 \\ ~2~ \\ 1 \\ 2 \end{bmatrix} \right)=\begin{bmatrix} ~2~ \\ 3 \\ 6 \end{bmatrix} \qquad\hbox{and}\qquad T\left(\begin{bmatrix}~~2 \\ -1 \\ 0 \\ -1 \end{bmatrix} \right)=\begin{bmatrix} 2 \\ ~0~ \\ 1 \end{bmatrix}. \] If that is all we know about \(T\), then do we have enough information to compute the value of \(T\) below? \[T\left(\begin{bmatrix} 5 \\ 8 \\ ~3~ \\ 8 \end{bmatrix} \right) = \hskip5in\] If yes, then compute it (showing how you do so). If no, then explain why not. Hint: try to write the third input vector as a linear combination of the first two.
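One possible way to test the hint numerically is sketched below with made-up vectors in \(\mathbb{R}^3\). The vectors `u`, `v`, `w` and the images `Tu`, `Tv` are hypothetical and are not the ones in this problem. The sketch solves for the weights with base R's `qr.solve` and checks that the residual is zero before using linearity.

```r
# Hypothetical vectors (NOT the vectors from the problem).
u <- c(1, 0, 1)
v <- c(0, 1, 1)
w <- c(2, 3, 5)

# Least-squares solution of [u v] c = w.
coeffs <- unname(qr.solve(cbind(u, v), w))

# w is a linear combination of u and v only if the residual is zero.
residual <- w - as.vector(cbind(u, v) %*% coeffs)
is_combination <- max(abs(residual)) < 1e-8

# Hypothetical images of u and v under a linear map T.
Tu <- c(1, 1)
Tv <- c(0, 2)

# If w = c1 u + c2 v, linearity gives T(w) = c1 T(u) + c2 T(v).
Tw <- coeffs[1] * Tu + coeffs[2] * Tv

coeffs
is_combination
Tw
```

If the residual were not zero, the two known values of the transformation would not determine its value at `w`.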

3.3 House Renovations

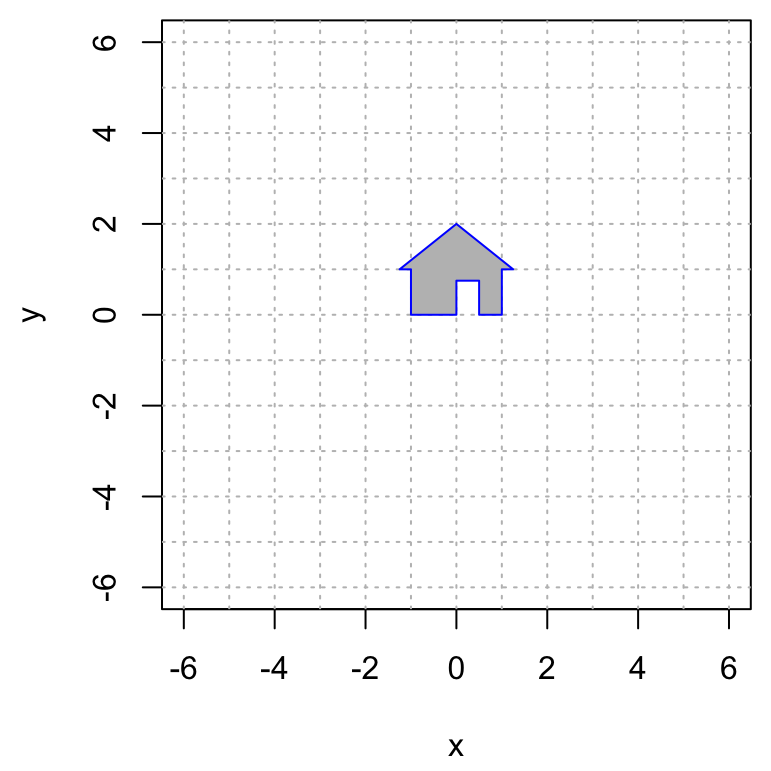

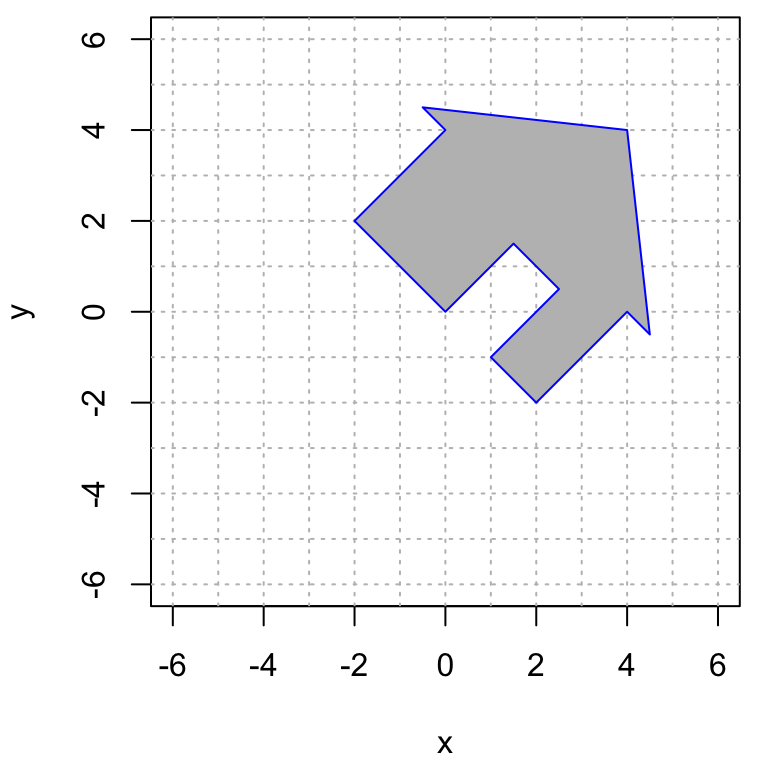

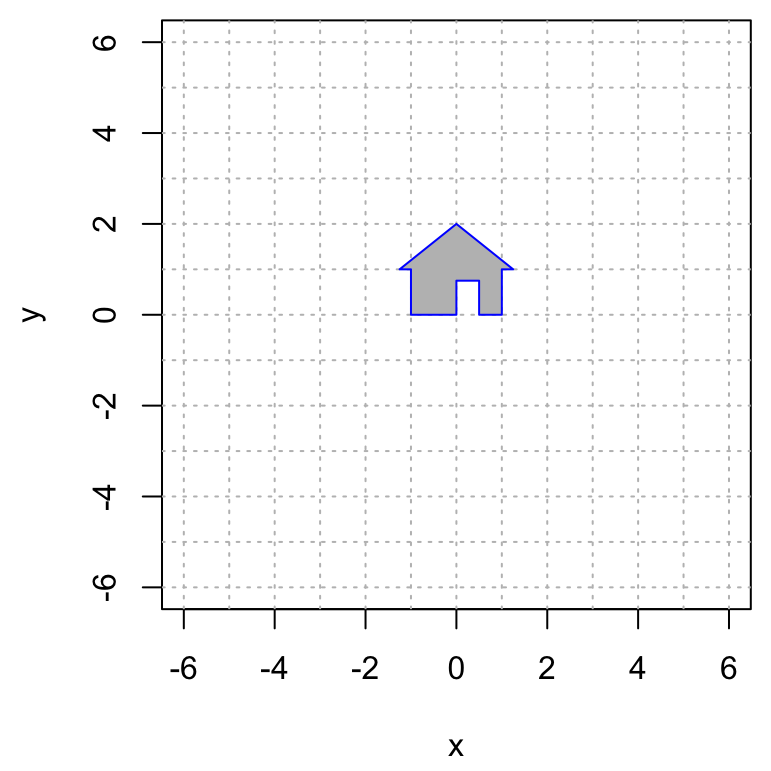

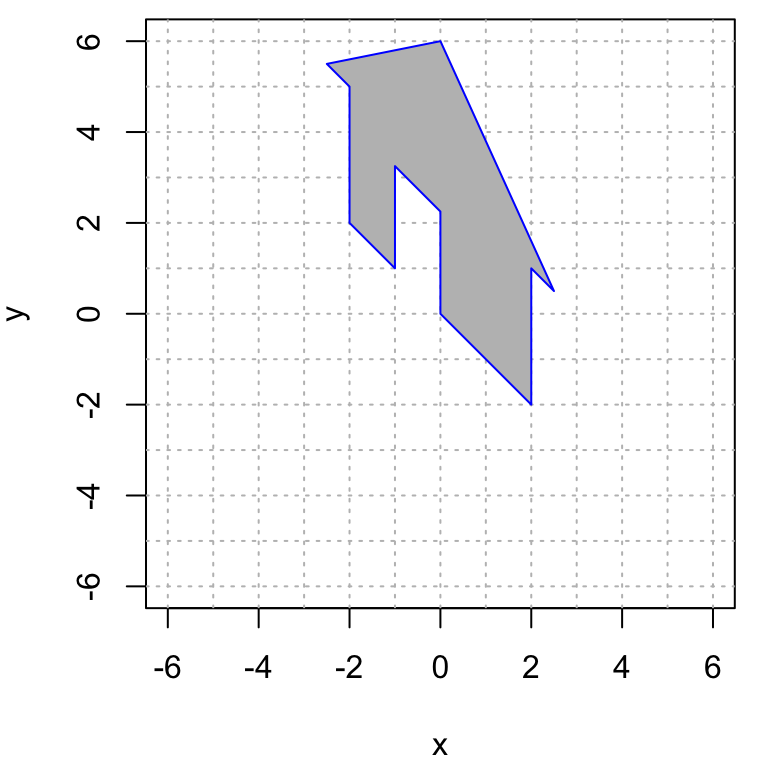

Find the matrix of a linear transformation \(T: \mathbb{R}^2 \to \mathbb{R}^2\) that performs the given transformation of my house. (Hint: use the base, the doorway and the peak of the roof as a guide.)

- Transformation #1


- Transformation #2


3.4 Matrix of a Nonlinear Transformation?

This problem illustrates what happens if you try to make the matrix of a transformation that is not linear. Consider the transformation \(T\) defined by \[ T \left( \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} \right) = \begin{bmatrix} x_1 + x_2^2 + x_3 \\ 2 x_2 + x_1 x_3 + 1 \\ 2 x_1 + 3 x_2 + x_3 \end{bmatrix} \]

This is not a linear transformation. Let’s see what happens if we compute its matrix anyway. Compute \(T(\mathbf{e}_1)\), \(T(\mathbf{e}_2)\), and \(T(\mathbf{e}_3)\), and put the vectors you get in the columns of a matrix \(A\). Then compute the product below: \[ \underbrace{\begin{bmatrix} \cdot & \cdot & \cdot \\ \cdot & \cdot & \cdot \\ \cdot & \cdot & \cdot \\ \end{bmatrix}}_{A} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \] Explain how the result of this computation demonstrates that \(T\) is not linear.

3.5 A Proof

Let \(T: \mathbb{R}^n \to \mathbb{R}^m\) be a linear transformation. Suppose that \(\{v_1, v_2, v_3, v_4\}\) is a linearly independent set of vectors in \(\mathbb{R}^n\) but the set of images \(\{T(v_1), T(v_2), T(v_3), T(v_4)\}\) is a linearly dependent set in \(\mathbb{R}^m\). In the following steps, you will prove that \(T\) is not one-to-one.

a. Write out clearly, using the definition, what it means for \(\{v_1, v_2, v_3, v_4\}\) to be linearly independent.

b. Write out clearly, using the definition, what it means for \(\{T(v_1), T(v_2), T(v_3), T(v_4)\}\) to be linearly dependent.

c. Use the definition of linear transformation and parts (a) and (b) above to argue that \(T(x) = \vec{0}\) for some nonzero vector \(x \in \mathbb{R}^n\).

d. Explain why this tells us that \(T\) is not one-to-one.

3.6 Inner and Outer Products

We can also think of vectors as matrices. A column vector is an \(n \times 1\) matrix and a row vector is a \(1 \times n\) matrix.

a. Compute the following products. These matrix products are called inner products (or dot products) of the vectors.

\[ \begin{bmatrix} 4 & -1 & 2 & 3\end{bmatrix} \begin{bmatrix} 1 \\ 2 \\1 \\3 \\\end{bmatrix} = \hskip3in \] \[ \begin{bmatrix} 4 & -1 & 2 & 3\end{bmatrix} \begin{bmatrix} 1 \\ 1 \\1 \\1 \\\end{bmatrix} = \hskip3in \] \[ \begin{bmatrix} 4 & -1 & 2 & 3\end{bmatrix} \begin{bmatrix} 2 \\ 5 \\ 0 \\ -1 \\\end{bmatrix} = \hskip3in \]

b. Now compute the following products. These are called outer products.

\[ \begin{bmatrix} 1 \\ 2 \\1 \\3 \\\end{bmatrix} \begin{bmatrix} 1 & -5 & 2 & 3\end{bmatrix} = \hskip3in \]

\[ \begin{bmatrix} 1 \\ 2 \\1 \\3 \\\end{bmatrix} \begin{bmatrix} 1 & 1 & 1 & 1\end{bmatrix} =\hskip3in \]

c. Row reduce both of the matrices that you get in part b (this should be easy to do by hand, but you can use R if you want to). How many pivots do you get? Explain why you always get this number of pivots when you row reduce an outer product.
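For anyone checking the hand computations in R, the following is a minimal sketch of how inner and outer products can be formed with `%*%` and `t()`. The vectors `u` and `w` are hypothetical and are not the ones in this problem; the point is only the shapes of the two results.

```r
# Hypothetical vectors (NOT the ones in the problem).
u <- c(1, 2, 3)
w <- c(4, 5, 6)

# Inner product: (1 x 3) times (3 x 1) gives a 1 x 1 matrix.
inner <- t(u) %*% w

# Outer product: (3 x 1) times (1 x 3) gives a 3 x 3 matrix.
outer_uw <- u %*% t(w)

dim(inner)
dim(outer_uw)
outer_uw
```

Note that the order of the factors decides which product you get, even though the same two vectors are involved.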

3.7 Archaeological Seriation

The matrix \(A\) below is used in archaeological dating. Its rows correspond to four different grave sites \(G_1, G_2, G_3, G_4\) and its columns correspond to five types of pottery \(P_1, P_2, P_3, P_4, P_5\). There is a 1 in position \(i\)-\(j\) if pottery type \(P_j\) is found in grave \(G_i\) (and a 0 otherwise). \[ A=\begin{array}{c|ccccc} & P_1 & P_2 & P_3 & P_4 & P_5 \\ \hline G_1 & 1 & 1 & 0 & 1 & 1 \\ G_2 & 0 & 0 & 1 & 0 & 1 \\ G_3 & 0 & 1 & 1 & 0 & 1 \\ G_4 & 1 & 1 & 1 & 1 & 1 \\ \end{array} \]

Compute the matrix \(\mathbf{G} = A A^T\), where \(A^T\) is the transpose of \(A\), meaning that the rows and columns have been interchanged.

Give the meaning of the \(i\)-\(j\) entry of \(\mathbf{G}\) (the entry in row \(i\) and column \(j\)). State the meaning of this entry clearly in one or more complete sentences, and explain why it has this meaning.

3.8 Rental Car

Solve this problem using R and turn in a markdown file knitted to .html.

A group of Macalester alumni open a rental car company specializing in renting electric cars. As a start, they have opened offices in St. Paul, Rochester, and Duluth. Through market research they find that of the cars rented in St. Paul, 85% will get returned in St. Paul, 9% will get returned in Rochester, and 6% will get returned in Duluth. Of the cars rented in Rochester, 30% will get returned in St. Paul, 60% will get returned in Rochester, and 10% in Duluth. Of the cars rented in Duluth, 35% will get returned in St. Paul, 5% in Rochester, and 60% in Duluth. This information is represented in the matrix M below.

    ##       StP Roch  Dul
    ## [1,] 0.85  0.3 0.35
    ## [2,] 0.09  0.6 0.05
    ## [3,] 0.06  0.1 0.60

Such a matrix is called a probability matrix or a stochastic matrix because it contains numbers between 0 and 1 and each of its columns sums to 1.

The owners are trying to use this data to estimate how much of their fleet will be at each location on average in the long run. Assume that initially they locate 20 cars in each city. This can be recorded by the vector

    v0 = c(20,20,20)

Apply M to v0, call this vector v1, and explain, using how the matrix-vector product works, why v1 represents the number of cars at each location one day later (for simplicity, we assume that each rental is for 1 day).

Now apply M to v1 and call it v2. This should represent the number of cars at each location 2 days later. Also compute the square of the matrix M and call it M2. Confirm that M2 times v0 is the same as M times v1.

Write a for loop that applies M over and over again to see what happens to the distribution of cars in the long run (we will learn how to do this in class, but you can also probably just google it). Does this sequence stabilize or does it keep changing after each application? If it does stabilize, how long does it take to stabilize (to within 0.1 cars at each location)?

Does the starting distribution matter? Try 4 different starting distributions (with a total of 60 cars) and see what the final distribution looks like in each case. For one of your 4 starting distributions, try all 60 cars at one of the locations.
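If the for loop is unfamiliar, the pattern might look something like the sketch below. It uses a hypothetical two-location matrix `P` and starting vector `v` rather than the M and v0 of this problem, and the stopping rule (largest change under 0.1 cars) is one possible reading of "stabilize", not the only one.

```r
# Hypothetical 2-location example (NOT the matrix M from the problem).
P <- cbind(c(0.9, 0.1),
           c(0.5, 0.5))    # each column sums to 1
v <- c(30, 30)             # hypothetical starting distribution

for (day in 1:50) {
  v_next <- as.vector(P %*% v)       # distribution one day later
  change <- max(abs(v_next - v))     # largest change at any location
  v <- v_next
  if (change < 0.1) break            # stop once every location moves < 0.1 cars
}

day   # number of days simulated before stopping
v     # distribution at that point
```

Rerunning the loop with a different starting `v` that has the same total is a quick way to compare where different starting distributions end up.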

3.9 Adjacency Matrix

You can do this problem in R or by hand. Consider the matrix \(A\) defined here:

A = rbind(c( 0 , 1 , 0 , 1 , 1 ,0), c(1 , 0 , 1 , 1 , 0, 0 ),c( 0 , 1 , 0 , 1 , 1, 0 ),

c( 1 , 1 , 1 , 0 , 1, 0 ),c( 1 , 0 , 1 , 1 , 0, 1 ), c(0, 0, 0, 0, 1, 0))

A## [,1] [,2] [,3] [,4] [,5] [,6]

## [1,] 0 1 0 1 1 0

## [2,] 1 0 1 1 0 0

## [3,] 0 1 0 1 1 0

## [4,] 1 1 1 0 1 0

## [5,] 1 0 1 1 0 1

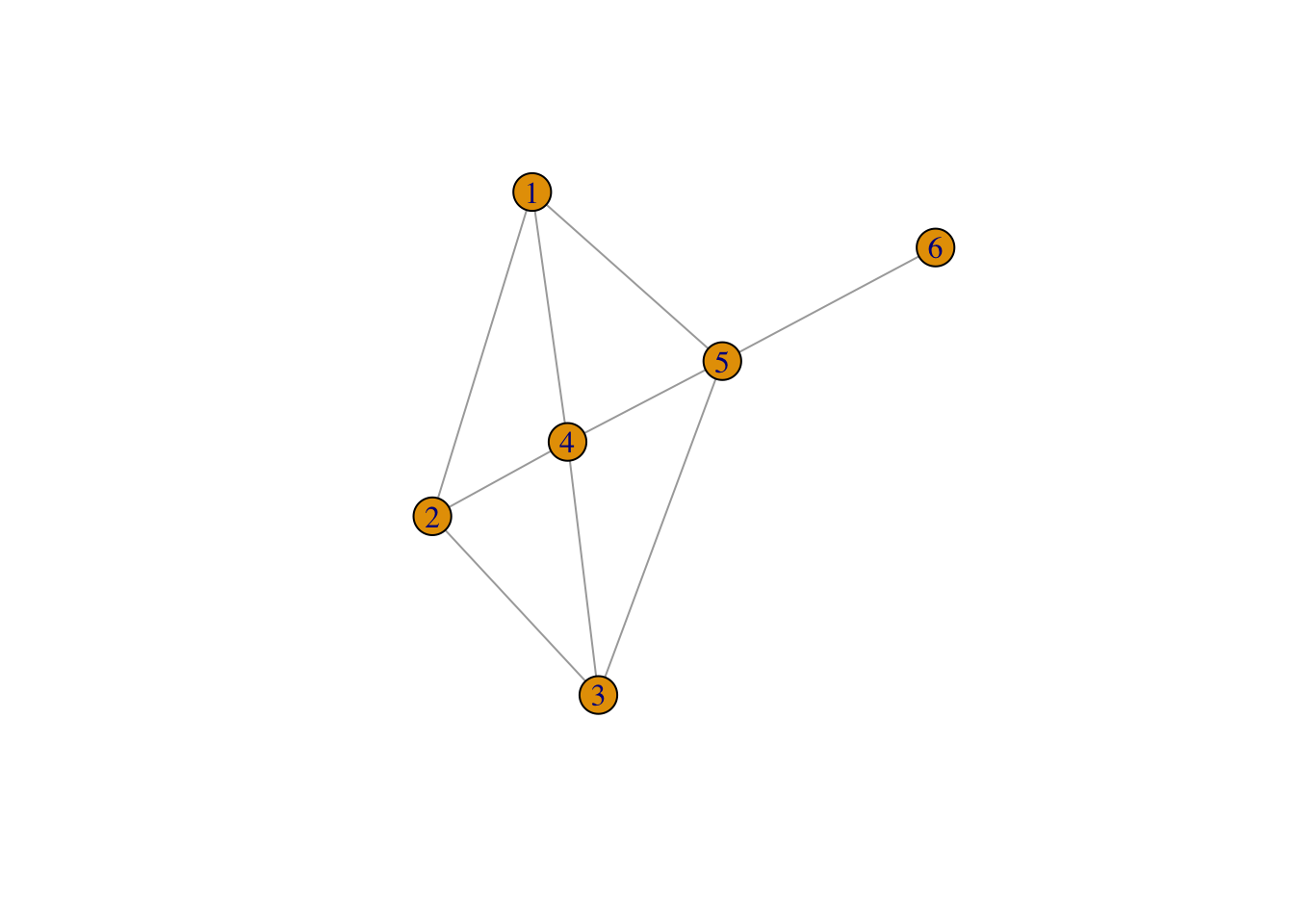

## [6,] 0 0 0 0 1 0This matrix represents the connections in the network diagram below. There is a 1 in position \((i,j)\) of the matrix if there is a connection (an edge) between vertex \(i\) and vertex \(j\) and there is a 0 if there is not.

Compute \(A v\) where \(v\) is the vector of all 1’s. Explain what this new vector tells us about the graph.

Compute \(A^2 = A A\), the square of the matrix \(A\).

Look at the \((2,5)\) entry of \(A^2\). Explain what this entry says about connections in the network. Do the same for the \((2,3)\) and the \((2,6)\) entry of \(A^2\).
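If you work in R, one thing to watch for is that `^2` squares each entry separately, while the matrix product needs `%*%`. The sketch below shows the difference on a hypothetical 3-vertex path graph `B`, which is not the graph in this problem.

```r
# Hypothetical path graph 1 - 2 - 3 (NOT the graph in the problem).
B <- rbind(c(0, 1, 0),
           c(1, 0, 1),
           c(0, 1, 0))

ones <- rep(1, 3)
Bv <- B %*% ones          # matrix-vector product with the all-ones vector

B2 <- B %*% B             # matrix product B B
B_elementwise <- B^2      # squares each entry; a different operation

B2
B_elementwise
identical(B2, B_elementwise)
```

For a 0-1 matrix the elementwise square just returns the matrix itself, so mixing up the two operators gives a plausible-looking but wrong answer.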