Section 30 Quiz 4 Review

30.1 Overview

Our fourth quiz has two goals:

- It will cover the new material from Sections 6.1-6.5.

- It will have some comprehensive material.

The comprehensive material will not be tricky. My goal is to be sure that you understand the fundamentals of the course.

The best way to study is to do practice problems. The quiz will have calculation problems (like Edfinity) and more conceptual problems (like the problem sets). Here are some ways to practice:

- Make sure that you have mastered the Vocabulary, Skills and Concepts listed below.

- Look over the Edfinity homework assignments.

- Do practice problems from the Edfinity Practice assignments. These allow you to “Practice Similar” by generating new variations of the same problem.

- Redo the Jamboard problems.

- Try to re-solve the Problem Sets and compare your answers to the solutions.

- Do the practice problems below. Compare your answers to the solutions.

30.2 Vocabulary, Concepts and Skills

See the Week 7-8 Learning Goals for the list of vocabulary, concepts and skills.

30.3 Comprehensive Review

Here are some terms and ideas that you should know:

- Pivot position.

- Elementary row operations.

- Ways to compute and think about \(A v\) in both words and symbols.

- Linear combination.

- \(\mbox{Span}(v_1, \ldots, v_k)\). In particular, you should be able to visualize \(\mbox{Span}(v)\) and \(\mbox{Span}(u,v)\) and give geometric interpretations of these sets in \(\mathbb{R}^2\) or \(\mathbb{R}^3\).

- Linearly independent and linearly dependent.

- Linear transformation.

- Domain, codomain, image, range, onto, and one-to-one.

- The transpose of a matrix, the inverse of a matrix, invertible matrix.

- Subspace.

- Null space, column space, and row space of a matrix.

- Kernel and range of a linear transformation.

- Basis and dimension.

- Rank.

- Eigenvalue, eigenvector, eigenspace.

- Characteristic polynomial and characteristic equation.

- Diagonalizable matrix.

- Dot product, length of a vector, angle between vectors, cosine similarity.

- Orthogonal vectors, orthogonal spaces.

- Orthogonal complement of a subspace.

- Orthogonal basis, orthonormal basis, orthogonal matrix.

- Orthogonal projection, least squares solutions.

30.4 Skills

Form an augmented matrix and reduce a matrix or augmented matrix into row echelon or reduced row echelon form. Determine whether a given matrix is in either of those forms. Determine whether a particular form of a matrix is a possible row echelon or reduced row echelon form.

Determine whether a system is consistent and if it has a unique solution. Write the general solution in parametric vector form. Describe the set of solutions geometrically.

Interpret a system of equations as (i) a vector equation (ii) a matrix equation.

Determine when a vector is in a subset spanned by specified vectors. Exhibit a vector as a linear combination of specified vectors. Determine whether a specified vector is in the range of a linear transformation.

Determine whether the columns of an \(m \times n\) matrix span \(\mathbb{R}^m\). Determine whether the columns are linearly independent.

Compute a matrix-vector product, and interpret it as a linear combination of the columns of \(A\). Use linearity of matrix multiplication to compute \(A(u + v)\) or \(A(c u)\).

Find the matrix of a linear transformation.

Determine whether a transformation is linear. Determine whether a linear transformation \(T( x)=A x\) is one-to-one or onto, using the properties of the matrix \(A\).

Determine whether a subset of vectors is a subspace.

Determine whether a set of vectors is linearly independent and whether it is a basis for some subspace or vector space. Find a basis for a vector space, or for the null space or column space of a matrix, or for the kernel or range of a linear transformation. Find the dimension of a vector space or subspace. Find the dimension of the null space (number of free variables) and column space (number of pivot columns) of a matrix.

Find and interpret the rank of a matrix.

Calculate the characteristic equation, eigenvalues, and eigenvectors of a square matrix. Find eigenvectors for a specific eigenvalue. Check if a vector is an eigenvector of a given matrix.

Determine whether a square matrix is diagonalizable. Factor a diagonalizable matrix into \(A=PDP^{-1}\), where \(D\) is a diagonal matrix.

Use eigenvalues and eigenvectors to analyze the long-term behavior of discrete dynamical systems.

Find the length of a vector, the distance between two vectors, or the angle between two vectors.

Determine whether a set of vectors is orthogonal. Determine whether a vector is orthogonal to a subspace or whether two subspaces are orthogonal, by checking whether their basis vectors are orthogonal.

Find the orthogonal projection of (i) one vector onto another vector, or (ii) one vector onto a subspace (using an orthogonal basis for the subspace). Find the distance between a vector and a space (by computing the residual).

Set up the matrix equation to find the “best-fitting” function to a set of data using least squares. Interpret the normal equations \(A^{\top}A x = A^{\top}b\). Find the least squares solution by solving the normal equations, which gives \(\hat{x}=(A^{\top}A)^{-1}A^{\top}b\) when \(A^{\top}A\) is invertible.

30.5 Practice Problems

30.5.1

Let \(\mathsf{v} = \begin{bmatrix}1 \\ -1 \\ 1 \end{bmatrix}\) and \(\mathsf{w}= \begin{bmatrix}5 \\ 2 \\ 3 \end{bmatrix}\).

Find \(\| \mathsf{v} \|\) and \(\| \mathsf{w} \|\).

Find the distance between \(\mathsf{v}\) and \(\mathsf{w}\).

Find the cosine of the angle between \(\mathsf{v}\) and \(\mathsf{w}\).

Find \(\mbox{proj}_{\mathsf{v}} \mathsf{w}\).

Let \(W=\mbox{span} (\mathsf{v}, \mathsf{w})\). Use the residual from the previous projection to create an orthonormal basis \(\mathsf{u}_1, \mathsf{u}_2\) for \(W\) such that \(\mathsf{u}_1\) is a vector in the same direction as \(\mathsf{v}\).

30.5.2

Let \(\mathsf{u} \neq \mathbf{0}\) be a vector in \(\mathbb{R}^n\). Define the function \(T: \mathbb{R}^n \rightarrow \mathbb{R}^n\) by \(T(\mathsf{x}) = \mbox{proj}_{\mathsf{u}} \mathsf{x}\). Recall that the kernel of \(T\) is the subspace \(\mbox{ker}(T) = \{ \mathsf{x} \in \mathbb{R}^n \mid T(\mathsf{x}) = \mathbf{0} \}\). Describe \(\mbox{ker}(T)\) as explicitly as you can.

30.5.3

The vectors \(\mathsf{u}_1, \mathsf{u}_2\) form an orthonormal basis of a subspace \(W\) of \(\mathbb{R}^4\). Find the projection of \(\mathsf{v}\) onto \(W\) and determine how close \(\mathsf{v}\) is to \(W\). \[ \mathsf{u}_1 = \frac{1}{2}\begin{bmatrix} 1\\ -1\\ -1\\ 1 \end{bmatrix}, \quad \mathsf{u}_2 = \frac{1}{2}\begin{bmatrix} 1\\ -1\\ 1\\ -1 \end{bmatrix}, \quad \mathsf{v} = \begin{bmatrix} 2\\ 2\\ 4\\ 2 \end{bmatrix} \]

30.5.4

Consider vectors \(\mathsf{v}_1 = \begin{bmatrix} 1 \\ 1 \\-1 \end{bmatrix}\) and \(\mathsf{v}_2= \begin{bmatrix} 1 \\ 2 \\ 3 \end{bmatrix}\) in \(\mathbb{R}^3\). Let \(W=\mbox{span}(\mathsf{v}_1, \mathsf{v}_2)\).

Show that \(\mathsf{v}_1\) and \(\mathsf{v}_2\) are orthogonal.

Find a basis for \(W^{\perp}\).

Use orthogonal projections to find the representation of \(\mathsf{y} = \begin{bmatrix} 8 \\ 0 \\ 2 \end{bmatrix}\) as \(\mathsf{y} = \hat{\mathsf{y}} + \mathsf{z}\) where \(\hat{\mathsf{y}} \in W\) and \(\mathsf{z} \in W^{\perp}\).

30.5.5

Let \(W\) be the span of the vectors \[ \begin{bmatrix} 1 \\ -2 \\ 1 \\ 0 \\1 \end{bmatrix}, \quad \begin{bmatrix} -1 \\ 3 \\ -1 \\ 1 \\ -1 \end{bmatrix}, \quad \begin{bmatrix} 0 \\ 0 \\ 1 \\ 3 \\1 \end{bmatrix}, \quad \begin{bmatrix} 0 \\ 2 \\ 0 \\ 0 \\4 \end{bmatrix} \]

- Find a basis for \(W\). What is the dimension of this subspace?

- Find a basis for \(W^{\perp}\)

30.5.6

Consider the system \(A \mathsf{x} = \mathsf{b}\) given by \[ \begin{bmatrix} 1 & 1 & 1 \\ 1 & 2 & -1 \\ 1 & 1 & -1 \\ 1 & 2 & 1 \end{bmatrix} \begin{bmatrix} x_1\\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} 4\\ 1 \\ -2 \\ -1 \end{bmatrix}. \]

- Show that this system is inconsistent.

- Find the projected value \(\hat{\mathsf{b}}\), and the residual \(\mathsf{z}\).

- How close is your approximate solution to the desired target vector?

30.5.7

Here is an inconsistent system of equations: \[ \begin{bmatrix} 1 & 2 \\ 1 & 2 \\ 1 & -1 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} 6\\ 4 \\ -4 \end{bmatrix} \]

State the normal equations for this problem (be sure to do all of the necessary matrix multiplications).

Find the least squares solution to the problem.

How close is your approximate solution to the desired target vector?

30.5.8

According to the COVID Tracking Project, Minnesota had \(54,463\) positive COVID-19 cases between March 6 and July 31, 2020. As of December 16, 2020, that count has reached \(386,412\).

The vector covid.mn lists the total number of new COVID-19 cases in Minnesota between August 1, 2020 and December 16, 2020 (on top of the previously reported \(54,463\)).

covid.start = 54463

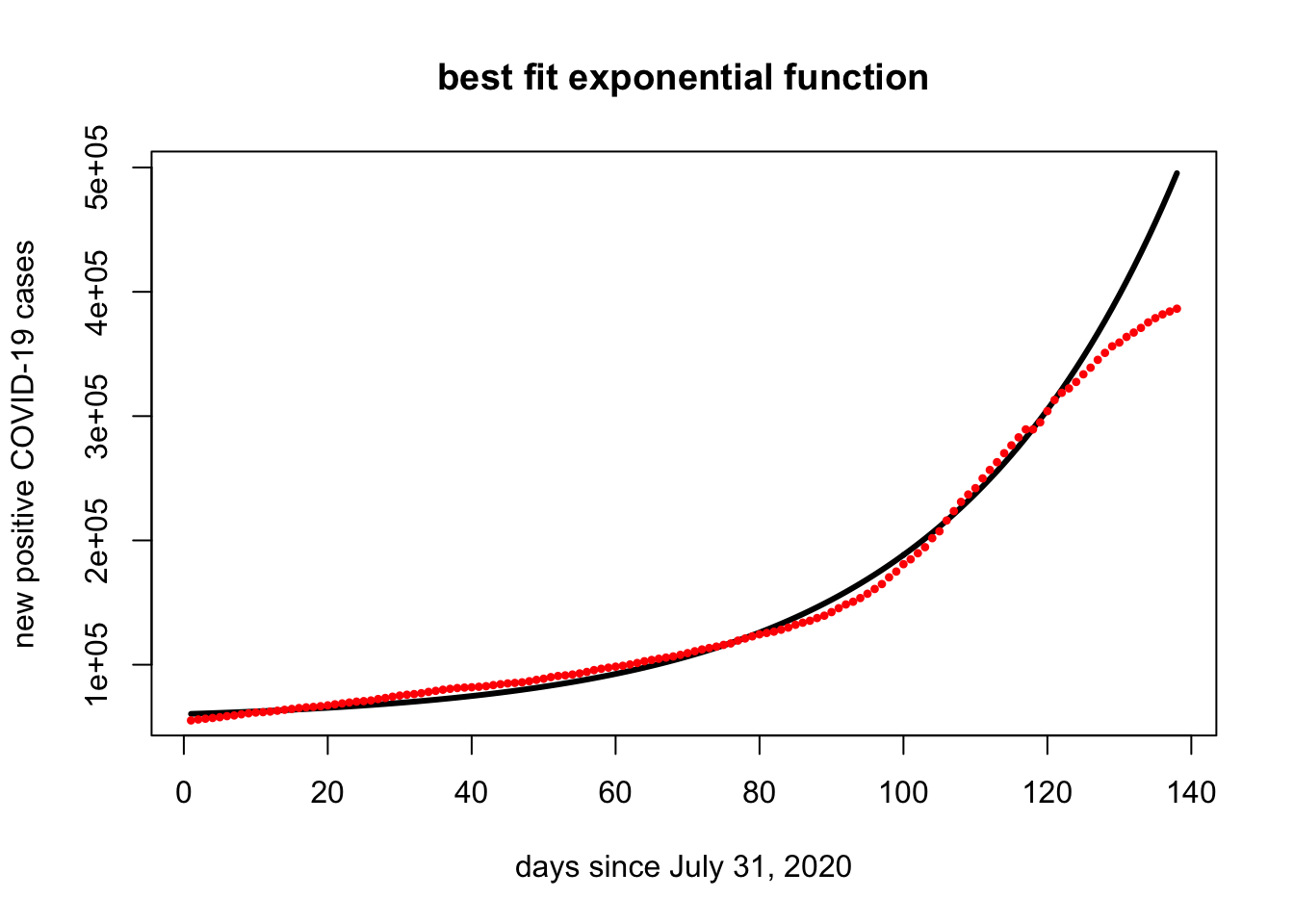

covid.mn = c(725, 1484, 2097, 2699, 3316, 4177, 4722, 5638, 6435, 7053, 7376, 7840, 8530, 9260, 9950, 10689, 11253, 11598, 12155, 12845, 13670, 14404, 15121, 15835, 16244, 16773, 17927, 18777, 19794, 20726, 21401, 21892, 22622, 23660, 24503, 25417, 26124, 26762, 27145, 27405, 27786, 28253, 29125, 29848, 30486, 30888, 31350, 32259, 33344, 34258, 35554, 36479, 36959, 37637, 38549, 39726, 41196, 42271, 43175, 43984, 44671, 45737, 46903, 48324, 49363, 50336, 51277, 52188, 53459, 54849, 56365, 57805, 58976, 60111, 61480, 62643, 64933, 66627, 68349, 69976, 71068, 72128, 73689, 75400, 77659, 79339, 80909, 83073, 84981, 87848, 91002, 94009, 96209, 99157, 102633, 106460, 110402, 115844, 120491, 126399, 130325, 135218, 140107, 147332, 152876, 161565, 169118, 176555, 182486, 187580, 195443, 202237, 208489, 215694, 222037, 228453, 234840, 234840, 240538, 249560, 258506, 264300, 267849, 273014, 279163, 284510, 290818, 296399, 301689, 304740, 309256, 312755, 316505, 320935, 324360, 327378, 329701, 331949)

Find the best fitting exponential function \(f(t) = a e^{k t}\) for the number of COVID-19 cases in Minnesota since July 31, 2020. Here \(t\) is the number of days since July 31.

Run the following code to plot your function. This code assumes that your least squares solution is given by xhat. Does it look like a good fit?

30.5.9 One-to-one and Onto

Here is a matrix \(A\) and its reduced row echelon form \(B\) \[ A = \begin{bmatrix} 1 & 3 & -3 & 1 & 0 \\ 2 & 1 & 0 & 6 & 5 \\ 3 & 3 & -3 & 6 & 3 \\ -1 & 4 & -3 & -3 & -1 \end{bmatrix} \qquad \longrightarrow \qquad B = \begin{bmatrix} 1 & 0 & 0 & 2.5 & 1.5 \\ 0 & 1 & 0 & 1.0 & 2.0 \\ 0 & 0 & 1 & 1.5 & 2.5 \\ 0 & 0 & 0 & 0.0 & 0.0 \end{bmatrix}. \]

Find a basis for \(\mbox{Nul}(A)\) and \(\mbox{Col}(A)\).

Is the linear transformation \(T(\mathsf{x}) = A \mathsf{x}\) one-to-one? Onto?

30.5.10 A 4x5 Matrix

\(\mathsf{A}\) is a \(4 \times 5\) matrix and \(b \in \mathbb{R}^4\). The augmented matrix \([\,\mathsf{A}\mid b\,]\) row reduces as shown here. \[ [\,\mathsf{A}\mid b\,] = \left[ \begin{array}{ccccc|c} \vert & \vert & \vert & \vert & \vert &\vert\\ v_1 & v_2 & v_3 & v_4 & v_5 & b\\ \vert & \vert & \vert & \vert & \vert &\vert\\ \end{array} \right] \longrightarrow \left[ \begin{array}{rrrrr|r} 1 & 0 & 2 & 0 & -1 &1\\ 0 & 1 & -1 & 0 & -1 &1\\ 0 & 0 & 0 & 1 & 1 &-2\\ 0 & 0 & 0 & 0 & 0 & 0 \end{array} \right] \]

a. Give all of the solutions to \(\mathsf{A} x = b\) in parametric form.

b. Give a dependence relation among the columns of \(\mathsf{A}\).

c. These true-false questions refer to the coefficient matrix \(\mathsf{A}\) above. Decide if the statement is T = True or F = False. No justification necessary.

- \(\mathsf{A} x = b\) has a solution for all \(b \in \mathbb{R}^4\).

- The columns of \(\mathsf{A}\) span \(\mathbb{R}^4\).

- If \(\mathsf{A} x = b\) has a solution, then it has infinitely many solutions.

- The linear transformation \(x \mapsto \mathsf{A} x\) is one-to-one.

- The linear transformation \(x \mapsto \mathsf{A} x\) is onto.

30.5.11 A x = b

Suppose that \(\mathsf{A}\) is an \(n \times n\) matrix and that \(\vec{\mathsf{x}}_1\) and \(\vec{\mathsf{x}}_2\) are two solutions to \(\mathsf{A}\vec{\mathsf{x}} = \vec{\mathsf{b}}\) with \(\vec{\mathsf{b}}\not= \vec{\mathbf{0}}\) and \(\vec{\mathsf{x}}_1 \not= \vec{\mathsf{x}}_2\).

Give a nonzero solution to \(\mathsf{A}\vec{\mathsf{x}}= \vec{\mathbf{0}}\).

Give a solution to \(\mathsf{A}\vec{\mathsf{x}}= \vec{\mathsf{b}}\) that no one else in the class has.

Decide if the statement is T = True or F = False, or I = there is not enough information to know.

- The equation \(\mathsf{A}\vec{\mathsf{x}} = \vec{\mathsf{y}}\) has a solution for all \(\vec{\mathsf{y}}\in \mathbb{R}^n\).

- \(\lambda = 0\) is an eigenvalue of \(\mathsf{A}\).

- \(\mathsf{A}\) is invertible.

- \(\mathsf{A}\) is diagonalizable.

30.5.12 Watch this!

The answer to at least one question on Quiz 4 is contained in this video.

30.6 Solutions to Practice Problems

30.6.1

\[\begin{align} \| \mathsf{v} \| &= \sqrt{ \mathsf{v} \cdot \mathsf{v}} = \sqrt{1+1+1} = \sqrt{3} \\ \| \mathsf{w} \| &= \sqrt{ \mathsf{w} \cdot \mathsf{w}} = \sqrt{25+4+9} = \sqrt{38} \end{align}\]

We have \(\mathsf{v} - \mathsf{w} = \begin{bmatrix} -4 \\ -3 \\ -2 \end{bmatrix}\) and so \[ \| \mathsf{v} - \mathsf{w}\| = \sqrt{16+9+4} = \sqrt{29} \]

\[ \cos \theta = \frac{\mathsf{v} \cdot \mathsf{w}}{\| \mathsf{v} \| \, \|\mathsf{w} \| } = \frac{5-2+3}{\sqrt{3} \, \sqrt{38} } = \frac{2\sqrt{3}}{\sqrt{38} } \]

\[ \hat{\mathsf{w}} = \mbox{proj}_{\mathsf{v}} \mathsf{w} = \frac{\mathsf{v} \cdot \mathsf{w}}{ \mathsf{v} \cdot \mathsf{v} } \, \mathsf{v} = \frac{5-2+3}{1+1+1} \mathsf{v} = 2 \mathsf{v} = \begin{bmatrix} 2 \\ -2 \\ 2 \end{bmatrix} \]

Using \(\hat{\mathsf{w}}\) from the previous problem, we know that \[ \mathsf{z} = \mathsf{w} - \hat{\mathsf{w}} = \begin{bmatrix} 5 \\ 2 \\ 3 \end{bmatrix} - \begin{bmatrix} 2 \\ -2 \\ 2 \end{bmatrix} = \begin{bmatrix} 3 \\ 4 \\ 1 \end{bmatrix} \] is orthogonal to \(\mathsf{v}\). So an orthonormal basis is \[ \frac{1}{\sqrt{3}} \begin{bmatrix} 1 \\ -1 \\ 1 \end{bmatrix} \quad \mbox{and} \quad \frac{1}{\sqrt{26}} \begin{bmatrix} 3 \\ 4 \\ 1 \end{bmatrix}. \]
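If you want to check these hand calculations in R, here is a minimal sketch using only base R. The helper name `vec_norm` and the variable names are my own choices for illustration, not part of any course package.

```r
# Vectors from Problem 30.5.1
v <- c(1, -1, 1)
w <- c(5, 2, 3)

# Hypothetical helper: Euclidean length of a vector
vec_norm <- function(x) sqrt(sum(x * x))

norm_v    <- vec_norm(v)                        # should equal sqrt(3)
norm_w    <- vec_norm(w)                        # should equal sqrt(38)
dist_vw   <- vec_norm(v - w)                    # should equal sqrt(29)
cos_theta <- sum(v * w) / (norm_v * norm_w)     # cosine of the angle

# Projection of w onto v, and the residual
w_hat <- (sum(v * w) / sum(v * v)) * v
z     <- w - w_hat

# Normalize v and z to get an orthonormal basis for W
u1 <- v / vec_norm(v)
u2 <- z / vec_norm(z)

# For an orthonormal set, t(U) %*% U is the identity matrix
U <- cbind(u1, u2)
print(round(t(U) %*% U, 10))
```

The last line is a quick sanity check: if the off-diagonal entries are not zero, the two vectors are not orthogonal.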

30.6.2

Here are a few ways to describe \(\mbox{ker}(T)\).

\(\mbox{ker}(T) = \{ \mathsf{x} \in \mathbb{R}^n \mid \mathsf{x} \cdot \mathsf{u} = 0 \}\).

\(\mbox{ker}(T)\) is the set of vectors that are orthogonal to \(\mathsf{u}\).

Let \(A\) be the \(1 \times n\) matrix \(\mathsf{u}^{\top}\). Then \(\mbox{ker}(T)= \mbox{Nul}(A)\).

30.6.3

We have \(\mathsf{u}_1 \cdot \mathsf{v} = \frac{1}{2}(2-2-4+2)=-1\) and \(\mathsf{u}_2 \cdot \mathsf{v} = \frac{1}{2}(2-2+4-2)=1\) so \[ \hat{\mathsf{v}} = \mbox{proj}_W \mathsf{v} = - \mathsf{u}_1 + \mathsf{u}_2 = -\frac{1}{2} \begin{bmatrix} 1 \\ -1 \\ -1 \\ 1 \end{bmatrix} + \frac{1}{2} \begin{bmatrix} 1 \\ -1 \\ 1 \\ -1 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \\ 1 \\ -1 \end{bmatrix} \] with residual vector \[ \mathsf{z} = \mathsf{v} - \hat{\mathsf{v}} = \begin{bmatrix} 2 \\ 2 \\ 4 \\ 2 \end{bmatrix} - \begin{bmatrix} 0 \\ 0 \\ 1 \\ -1 \end{bmatrix} = \begin{bmatrix} 2 \\ 2 \\ 3 \\ 3 \end{bmatrix} \] and the distance is \(\| \mathsf{z} \| = \sqrt{4+4+9+9} = \sqrt{26}\).
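The same projection can be sketched in base R. Because the basis is orthonormal, the projection onto \(W\) is \(U U^{\top} \mathsf{v}\), where \(U\) has \(\mathsf{u}_1, \mathsf{u}_2\) as columns. The variable names here are assumptions for illustration.

```r
# Orthonormal basis of W and the vector v from Problem 30.5.3
u1 <- c(1, -1, -1, 1) / 2
u2 <- c(1, -1, 1, -1) / 2
v  <- c(2, 2, 4, 2)

U <- cbind(u1, u2)

# Coordinates of the projection: the dot products u1.v and u2.v
coeffs <- as.vector(t(U) %*% v)

# Projection onto W, residual, and distance from v to W
v_hat    <- as.vector(U %*% coeffs)
z        <- v - v_hat
dist_v_W <- sqrt(sum(z^2))

print(coeffs)
print(v_hat)
print(dist_v_W)
```

This shortcut only works when the columns of `U` are orthonormal; for a general basis you would need the normal equations instead.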

30.6.4

\(\mathsf{v}_1 \cdot \mathsf{v}_2 = 1 +2 - 3 =0\).

We must find \(\mbox{Nul}(A)\) where \(A = \begin{bmatrix} \mathsf{v}_1^{\top} \\ \mathsf{v}_2^{\top}\end{bmatrix}\).

\[ \begin{bmatrix} 1 & 1 & -1 \\ 1 & 2 & 3 \end{bmatrix} \longrightarrow \begin{bmatrix} 1 & 1 & -1 \\ 0 & 1 & 4 \end{bmatrix} \longrightarrow \begin{bmatrix} 1 & 0 & -5 \\ 0 & 1 & 4 \end{bmatrix} \] so the vector \(\begin{bmatrix} 5 \\ -4 \\ 1 \end{bmatrix}\) is a basis for \(W^{\perp}\).

- We have \[\begin{align} \hat{\mathsf{y}} &= \frac{\mathsf{y} \cdot \mathsf{v_1}}{\mathsf{v_1} \cdot \mathsf{v_1}} \, \mathsf{v_1} + \frac{\mathsf{y} \cdot \mathsf{v_2}}{\mathsf{v_2} \cdot \mathsf{v_2}} \, \mathsf{v_2} = \frac{8-2}{1+1+1} \mathsf{v_1} + \frac{8+6}{1+4+9} \mathsf{v_2} \\ &= 2\mathsf{v_1} +\mathsf{v_2} = \begin{bmatrix} 2 \\ 2 \\ -2 \end{bmatrix} + \begin{bmatrix} 1 \\ 2 \\ 3 \end{bmatrix} = \begin{bmatrix} 3 \\ 4 \\ 1 \end{bmatrix} \end{align}\] and so \[ \mathsf{z} = \mathsf{y} - \hat{\mathsf{y}} = \begin{bmatrix} 8 \\ 0 \\ 2 \end{bmatrix} - \begin{bmatrix} 3 \\ 4 \\ 1 \end{bmatrix} = \begin{bmatrix} 5 \\ -4 \\ 1 \end{bmatrix}. \]
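Here is a short base R sketch of this decomposition, in case you want to verify it numerically. The helper `proj_onto` is a hypothetical name. Adding the two one-dimensional projections is valid only because \(\mathsf{v}_1\) and \(\mathsf{v}_2\) are orthogonal.

```r
# Vectors from Problem 30.5.4
v1 <- c(1, 1, -1)
v2 <- c(1, 2, 3)
y  <- c(8, 0, 2)

# Hypothetical helper: projection of y onto the line spanned by v
proj_onto <- function(y, v) (sum(y * v) / sum(v * v)) * v

# v1 and v2 are orthogonal, so the projection onto W is the sum
# of the projections onto each basis vector
stopifnot(sum(v1 * v2) == 0)
y_hat <- proj_onto(y, v1) + proj_onto(y, v2)
z     <- y - y_hat

# z should be orthogonal to both v1 and v2
print(y_hat)
print(z)
print(c(sum(z * v1), sum(z * v2)))
```

Notice that the residual is exactly the basis vector of \(W^{\perp}\) found in the previous part, which is what we expect since \(W^{\perp}\) is one-dimensional here.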

30.6.5

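a. We will answer this one using RStudio.

##      [,1] [,2] [,3] [,4]
## [1,]    1    0    0    0
## [2,]    0    1    0    0
## [3,]    0    0    1    0
## [4,]    0    0    0    1
## [5,]    0    0    0    0

There is a pivot in every column, so the four vectors are linearly independent. We need all four vectors to span the column space, so they form a basis for \(W\) and \(\dim(W) = 4\).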

- We obtain a basis for \(W^{\perp}\) by finding \(\mbox{Nul}(A^{\top})\), so let’s row reduce \(A^{\top}\).

##      [,1] [,2] [,3] [,4] [,5]
## [1,]    1    0    0    0   -2
## [2,]    0    1    0    0    2
## [3,]    0    0    1    0    7
## [4,]    0    0    0    1   -2

The vector \(\begin{bmatrix} 2 \\ -2 \\ -7 \\ 2 \\ 1\end{bmatrix}\) spans \(W^{\perp}\).
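The R code that produced the output above is not shown, so here is a base R sketch that checks both answers without needing a row reduction function. It uses `qr()` to get the rank of \(A\) and then confirms that the proposed vector is orthogonal to every column of \(A\). This is my own illustrative check, not necessarily the way the output above was generated.

```r
# Columns of A are the four spanning vectors of W from Problem 30.5.5
A <- cbind(c( 1, -2,  1, 0,  1),
           c(-1,  3, -1, 1, -1),
           c( 0,  0,  1, 3,  1),
           c( 0,  2,  0, 0,  4))

# Rank of A = dimension of W = number of pivot columns
rank_A <- qr(A)$rank
print(rank_A)

# dim(W-perp) = 5 - dim(W), so a single nonzero vector in Nul(t(A)) is a basis
n <- c(2, -2, -7, 2, 1)
check <- as.vector(t(A) %*% n)   # each entry is (column of A) . n
print(check)
```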

30.6.6

##            b
## [1,] 1 0 0 0
## [2,] 0 1 0 0
## [3,] 0 0 1 0
## [4,] 0 0 0 1

There is a pivot in the last column of this augmented matrix, so this system is inconsistent.

Here is the least squares calculation.

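The least squares solution \(\hat{\mathsf{x}}\):

##      [,1]
## [1,]    2
## [2,]   -1
## [3,]    1

The projection \(\hat{\mathsf{b}} = A \hat{\mathsf{x}}\):

##               [,1]
## [1,]  2.000000e+00
## [2,] -1.000000e+00
## [3,]  6.661338e-16
## [4,]  1.000000e+00

The residual \(\mathsf{z} = \mathsf{b} - \hat{\mathsf{b}}\):

##      [,1]
## [1,]    2
## [2,]    2
## [3,]   -2
## [4,]   -2

A check that \(A^{\top} \mathsf{z} = \mathbf{0}\), up to rounding error:

##               [,1]
## [1,] -8.881784e-16
## [2,]  0.000000e+00
## [3,]  0.000000e+00

The length of the residual:

##      [,1]
## [1,]    4

The projection is \(\hat{\mathsf{b}} = [2,-1,0,1]^{\top}\). The residual is \(\mathsf{z} = [2,2,-2,-2]^{\top}\).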

- The distance between \(\mathsf{b}\) and \(\hat{\mathsf{b}}\) is \[ \| \mathsf{b} - \hat{\mathsf{b}} \| = \| \mathsf{z} \| = \sqrt{4+4+4+4} = \sqrt{16} = 4. \]
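For reference, here is one way the whole calculation for this problem could be sketched in base R. The variable names are assumptions, and this is not necessarily the code that generated the output above. The inconsistency check compares the rank of \(A\) with the rank of the augmented matrix.

```r
# System from Problem 30.5.6
A <- matrix(c(1, 1,  1,
              1, 2, -1,
              1, 1, -1,
              1, 2,  1), nrow = 4, byrow = TRUE)
b <- c(4, 1, -2, -1)

# Inconsistent exactly when augmenting with b raises the rank
inconsistent <- qr(cbind(A, b))$rank > qr(A)$rank

# Solve the normal equations t(A) A x = t(A) b
xhat <- as.vector(solve(t(A) %*% A, t(A) %*% b))

# Projection, residual, and distance from b to Col(A)
bhat   <- as.vector(A %*% xhat)
z      <- b - bhat
dist_b <- sqrt(sum(z^2))

print(inconsistent)
print(xhat)
print(round(bhat, 10))
print(dist_b)
```

Calling `solve(M, rhs)` solves the linear system directly, which is usually preferable to forming the inverse of \(A^{\top}A\) explicitly.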

30.6.7

Can be done by hand or by R:
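If you take the R route, a base R sketch might look like the following. The variable names (`AtA`, `Atb`, and so on) are my own assumptions; the printed values should agree with the output shown in the rest of this solution.

```r
# System from Problem 30.5.7
A <- matrix(c(1,  2,
              1,  2,
              1, -1), nrow = 3, byrow = TRUE)
b <- c(6, 4, -4)

# The two matrix products that appear in the normal equations
AtA <- t(A) %*% A
Atb <- t(A) %*% b

# Least squares solution
xhat <- as.vector(solve(AtA, Atb))

# Residual, its squared length, and its length
z        <- b - as.vector(A %*% xhat)
sq_len_z <- sum(z^2)
len_z    <- sqrt(sq_len_z)

print(AtA)
print(Atb)
print(xhat)
print(c(sq_len_z, len_z))
```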

##      [,1] [,2]
## [1,]    3    3
## [2,]    3    9

##      [,1]
## [1,]    6
## [2,]   24

- The normal equations are \[ \begin{bmatrix} 3 & 3 \\ 3 & 9 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} 6 \\ 24 \end{bmatrix} \]

- The least squares solution is

##      [,1]
## [1,]   -1
## [2,]    3

- How close?

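##      [,1]
## [1,]    2

##          [,1]
## [1,] 1.414214

The squared length of the residual is \(2\), so the distance from the target vector is \(\sqrt{2} \approx 1.414\).

30.6.8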

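##         [,1]
##   8.66588980
## x 0.03138331

# xhat holds the least squares solution: xhat[1] = log(a) and xhat[2] = k
a = exp(xhat[1])
k = xhat[2]
f=function(y){a * exp(k*(y))}
# x is assumed to hold the day numbers (days since July 31) used to set up the least squares problem
plot(x,f(x)+ covid.start,type="l",lwd=3,ylab="new positive COVID-19 cases", xlab="days since July 31, 2020", main="best fit exponential function")
points(x,covid.mn + covid.start,pch=20,cex=.7,col="red")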

The curve is a pretty good fit (unfortunately?). However, it does look like the additional restrictions of the last two weeks are slowing the COVID spread.
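The setup that produces xhat is not shown above, so here is a sketch of the idea on a small synthetic data set (not the COVID data). Taking logs turns \(y = a e^{kt}\) into the linear model \(\log y = \log a + k t\), which we can fit with the normal equations. The data and all variable names below are made up for illustration.

```r
# Synthetic data that follows y = 2 * exp(0.3 * t) exactly (illustration only)
t_days <- 1:6
y      <- 2 * exp(0.3 * t_days)

# Linear model for log(y): a column of ones (intercept) and a column of times
A <- cbind(1, t_days)

# Solve the normal equations t(A) A x = t(A) log(y)
xhat <- as.vector(solve(t(A) %*% A, t(A) %*% log(y)))

# Recover the exponential parameters
a <- exp(xhat[1])
k <- xhat[2]

print(c(a, k))
```

For the actual problem you would replace `t_days` with the day numbers and `y` with `covid.mn`. Keep in mind that this approach minimizes the error in \(\log y\), not in \(y\) itself.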

30.6.9

4x5 matrix: solution coming

30.6.10

Ax = b: solution coming

30.6.11

I hope you enjoyed the video.